Global organizations hold an unquantified volume of inaccessible files that contain pertinent metadata. These repositories are largely 'out of sight, out of mind', and organizations do not know the exact volume or value of what they contain.

Significant value can be recovered from these repositories without redrafting documents, which can be expensive. Data that is retained but never used, however, delivers no value. One way to release that value is to normalize files, that is, to bring them up to current technology standards.

Organizational challenge

Unfortunately, poor-quality information only worsens over time. Organizations today face the very real challenge of identifying files held in structured and unstructured environments. These files may be inaccessible or illegible for several reasons, including but not limited to:

Data wrangling services

Advances in technology are helping organizations unlock hidden value. Businesses are finding that data wrangling techniques, coupled with artificial intelligence, lead the way to unlocking and harnessing trapped metadata.

Data wrangling normalization methods, approaches and techniques are designed specifically to prevent destructive impact, i.e. the loss of data. For example, where the original data is moderately good, normalization techniques gently fine-tune it so that the raw data is not drastically altered. Where the original data is of poorer quality (e.g. an extremely dark background), advanced techniques separate the elements, treat each one individually and then recombine them, producing an optimal result.
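The two cases above can be illustrated with a minimal Python sketch. It is not the method used by any particular data wrangling service: the function names, the `dark_cutoff` and `strength` values, and the use of the mean brightness as the split point between marks and background are all assumptions made for illustration. A page is modelled as a small grid of grayscale pixels, and the original grid is never modified.

```python
from statistics import mean
from typing import List

Page = List[List[int]]  # grayscale pixels, 0 = black, 255 = white


def gentle_stretch(page: Page, strength: float = 0.3) -> Page:
    """Nudge each pixel part of the way toward a full contrast stretch."""
    flat = [p for row in page for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # uniform page: nothing to stretch
        return [row[:] for row in page]

    def adjust(p: int) -> int:
        stretched = (p - lo) * 255 / (hi - lo)
        # Blend original and stretched values so the change stays mild.
        return round((1 - strength) * p + strength * stretched)

    return [[adjust(p) for p in row] for row in page]


def separate_and_recombine(page: Page) -> Page:
    """Split a dark page into marks and background, treat each, then merge."""
    flat = [p for row in page for p in row]
    threshold = mean(flat)  # assumed split point between marks and background
    # Separate: flag pixels darker than the threshold as marks (an assumption).
    is_mark = [[p < threshold for p in row] for row in page]
    # Treat and recombine: marks become black, background becomes white.
    return [[0 if mark else 255 for mark in row] for row in is_mark]


def normalize(page: Page, dark_cutoff: int = 100) -> Page:
    """Pick a treatment from overall brightness; expects a non-empty page."""
    flat = [p for row in page for p in row]
    if mean(flat) < dark_cutoff:  # hypothetical cutoff for "extremely dark"
        return separate_and_recombine(page)
    return gentle_stretch(page)


good_scan = [[200, 90], [210, 80]]   # moderately good: light page, dark marks
dark_scan = [[60, 20], [55, 25]]     # poor: dark background, darker marks

print(normalize(good_scan))          # mild adjustment only
print(normalize(dark_scan))          # [[255, 0], [255, 0]]
```

Because every function returns a new grid, the source scan stays intact, which matches the non-destructive intent described above. A production pipeline would likely work on real image files with a dedicated imaging library and use more robust thresholding than a simple mean.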

Data wrangling approach

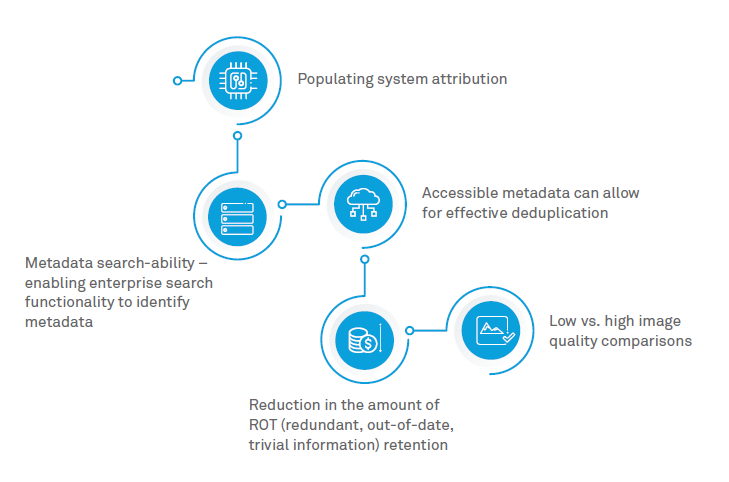

Organizations require a solution that is tailored to their needs without compromising the integrity of files and associated metadata. Specialized normalizing and pre-processing data wrangling techniques address the following:

The result

Normalizing data releases value from content that was once illegible. By maximizing the data's searchability, it enables faster and more accurate decision-making within the organization.

Data wrangling features must be developed from an intimate understanding of the inherent difficulties of normalizing files. This is the first step to releasing hidden value, which can in turn contribute towards:

Janine Murray

Consulting Practice of Energy, Natural Resources, Utilities, Engineering and Construction

Janine Murray is an IM Consultant with over 15 years of experience in the O&G industry. She has extensive Information Management experience across FE/Operations and Major Capital Projects (MCP), including IM brownfield modifications, greenfield enhancements, MCP joint ventures, closeout, and MCP handover to Operations. She is also experienced in document cleansing and data extraction techniques for digitizing O&G legacy assets.

She can be reached at: janine.murray@wipro.com
